FUSE over io_uring

Over the past few months I had the chance to spend some time looking at an interesting new FUSE feature. This feature, merged in the Linux kernel 6.14 release, makes it possible for the user-space server (the FUSE server) and the kernel to communicate using io_uring. This means that file systems implemented in user-space can get a performance improvement simply by enabling this new feature.

But let's start at the beginning:

What is FUSE?

Traditionally, file systems in *nix operating systems have been implemented within their (monolithic) kernels. From the BSDs to Linux, file systems were all developed in the kernel. Of course, there have been exceptions since the beginning: microkernels, for example, run in ring 0, while their file systems run as servers at lower privilege levels. But these were the exceptions.

There are, however, several advantages in implementing them in user-space instead. Here are just a few of the most obvious ones:

- It's probably easier to find people experienced in writing user-space code than kernel code.

- It is easier, generally speaking, to develop, debug, and test user-space applications. Not because the kernel is necessarily more complex, but because the kernel development cycle is slower and requires specialised tools and knowledge.

- There are more tools and libraries available in user-space. It's way easier to just pick an existing compression library to add compression to your file system than to re-implement it in the kernel. Sure, nowadays the Linux kernel is already very rich in all sorts of library-like subsystems, but still.

- Security, of course! Code in user-space can be isolated, while in the kernel it would be running in ring0.

- And, obviously, porting a file system to a different operating system is much easier if it's written in user-space.

And this is where FUSE can help: FUSE is a framework that provides the necessary infrastructure to make it possible to implement file systems in user-space.

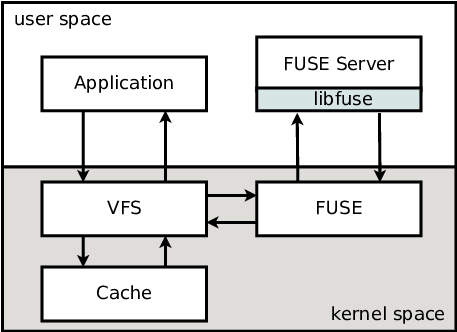

FUSE includes two main components: a kernel-space module and a user-space server. The kernel-space fuse module is responsible for getting all the requests from the virtual file system layer (VFS) and redirecting them to the user-space FUSE server. The communication between the kernel and the FUSE server is done through the /dev/fuse device.

There's also a third optional component: libfuse. This is a user-space library that makes life easier for developers implementing a file system, as it hides most of the details of the FUSE protocol used to communicate between user- and kernel-space.

The diagram illustrates the interaction between all these components.

As the diagram shows, when an application wants to execute an operation on a FUSE file system (for example, reading a few bytes from an open file), the workflow is as follows:

- The application executes a system call (e.g., read() to read data from an open file) and enters kernel space.
- The kernel VFS layer routes the operation to the appropriate file system implementation, the FUSE kernel module in this case. However, if the read() is done on a file that has been recently accessed, the data may already be in the page cache. In this case the VFS may serve the request directly and return the data immediately to the application without calling into the FUSE module.
- FUSE creates a new request to be sent to the user-space server and queues it. At this point, the application performing the read() is blocked, waiting for the operation to complete.
- The user-space FUSE file system server gets the new request from /dev/fuse and starts processing it. This may include, for example, network communication in the case of a network file system.
- Once the request is processed, the user-space FUSE server writes the reply back into /dev/fuse.
- The FUSE kernel module gets that reply and returns it to VFS, and the user-space application finally gets its data.

As we can see, there are a lot of blocking operations and context switches between user- and kernel-space.
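The request path above can be sketched as a toy Python model, where a queue stands in for /dev/fuse and a thread plays the FUSE server. This is only an illustration under heavy simplifications: ToyFuseKernel and toy_fuse_server are hypothetical names, the "page cache" is a dict keyed by the exact (path, offset, size) of a read rather than by pages, and none of the real FUSE protocol is modelled. What it does show is that a cache miss makes the caller block until the server replies, while a cache hit never reaches the server.

```python
import queue
import threading


class ToyFuseKernel:
    """Toy model of the read() path described above (not real kernel code)."""

    def __init__(self):
        self.page_cache = {}           # (path, offset, size) -> bytes; simplistic
        self.dev_fuse = queue.Queue()  # stands in for the /dev/fuse request queue
        self.upcalls = 0               # requests that had to reach the server

    def read(self, path, offset, size):
        key = (path, offset, size)
        if key in self.page_cache:     # VFS serves it from the page cache
            return self.page_cache[key]
        reply = queue.Queue(maxsize=1)
        self.upcalls += 1
        self.dev_fuse.put((path, offset, size, reply))  # FUSE queues a request
        data = reply.get()             # the application blocks here
        self.page_cache[key] = data
        return data


def toy_fuse_server(kernel, files, stop):
    """User-space server loop: get a request, process it, write the reply."""
    while not stop.is_set():
        try:
            path, offset, size, reply = kernel.dev_fuse.get(timeout=0.05)
        except queue.Empty:
            continue
        reply.put(files[path][offset:offset + size])


files = {"/hello.txt": b"hello, fuse"}
kernel = ToyFuseKernel()
stop = threading.Event()
server = threading.Thread(target=toy_fuse_server, args=(kernel, files, stop))
server.start()
first = kernel.read("/hello.txt", 0, 5)   # goes through the server
again = kernel.read("/hello.txt", 0, 5)   # served from the toy page cache
stop.set()
server.join()
print(first, again, kernel.upcalls)       # b'hello' b'hello' 1
```

Every cache miss costs a full round trip through the server, which is exactly the overhead FUSE over io_uring tries to reduce.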

What's io_uring?

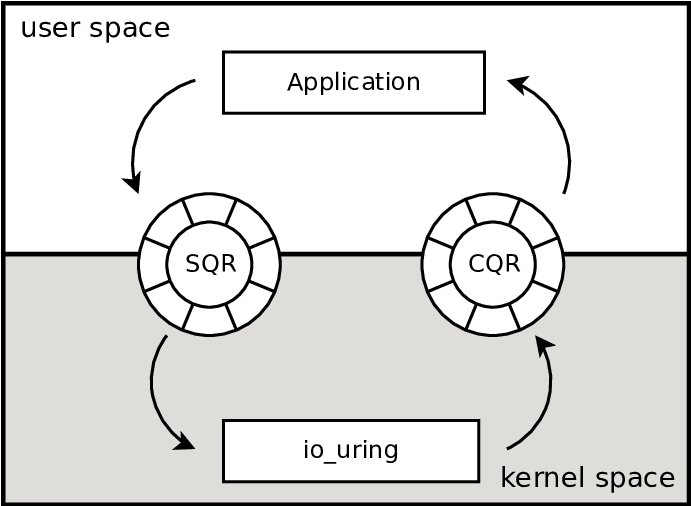

io_uring is an API for performing asynchronous I/O, meant to replace, for example, the old POSIX AIO API (aio_read(), aio_write(), etc.). io_uring can be used instead of read() and write(), but also for many other I/O operations, such as fsync() and poll(), or even for network-related operations such as the socket sendmsg() and recvmsg(). An application using this interface prepares a set of requests (Submission Queue Entries, or SQEs), adds them to the Submission Queue Ring (SQR), and notifies the kernel about these operations. The kernel eventually picks up these entries, executes them, and adds completion entries to the Completion Queue Ring (CQR). It's a simple producer-consumer model, as shown in the diagram.
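To make the producer-consumer model a bit more concrete, here is a toy Python model of the two rings. It is a sketch under simplifying assumptions: Ring and toy_kernel are hypothetical names, the real SQ ring stores indices into a separate SQE array, and the real rings live in memory shared with the kernel and need memory barriers, none of which is modelled here. The (user_data, result) pairs mimic the fact that each completion carries the user_data of the request it completes.

```python
class Ring:
    """Toy single-producer/single-consumer ring with free-running head/tail.

    As with io_uring's rings, the size is a power of two, so an index is
    turned into a slot with a bit mask instead of a modulo.
    """

    def __init__(self, entries):
        if entries <= 0 or entries & (entries - 1):
            raise ValueError("entries must be a power of two")
        self.mask = entries - 1
        self.slots = [None] * entries
        self.head = 0   # advanced by the consumer
        self.tail = 0   # advanced by the producer

    def push(self, entry):
        if self.tail - self.head == len(self.slots):
            return False                 # ring full: the producer must wait
        self.slots[self.tail & self.mask] = entry
        self.tail += 1
        return True

    def pop(self):
        if self.head == self.tail:
            return None                  # ring empty
        entry = self.slots[self.head & self.mask]
        self.head += 1
        return entry


def toy_kernel(sq, cq):
    """Play the kernel's role: drain the SQ, 'execute', post completions."""
    while (sqe := sq.pop()) is not None:
        user_data, op, payload = sqe
        result = len(payload) if op == "write" else 0   # pretend success
        cq.push((user_data, result))


sq, cq = Ring(8), Ring(8)
sq.push((1, "write", b"hello"))     # SQE: (user_data, opcode, buffer)
sq.push((2, "fsync", b""))
toy_kernel(sq, cq)                  # in reality: io_uring_enter() or SQ polling
completions = [cq.pop(), cq.pop()]  # [(1, 5), (2, 0)]
```

The application only ever advances the SQ tail and the CQ head, and the kernel only the SQ head and the CQ tail, which is what lets both sides share the rings without a system call per request.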

What's FUSE over io_uring?

As mentioned above, the usage of /dev/fuse for communication between the FUSE server and the kernel is one of the performance bottlenecks when using user-space file systems. Thus, replacing this mechanism with a block of memory (ring buffers) shared between the user-space server and the kernel was expected to result in performance improvements.

The implementation of FUSE over io_uring that was merged into the 6.14 kernel includes a pair of SQR/CQR rings per CPU core and, even if not all the low-level FUSE operations are available through io_uring (see the footnote at the end), the performance improvements are quite visible. Note that, in the future, this design of having a set of rings per CPU may change and may become customisable. For example, it may be desirable to have a set of CPUs dedicated to doing I/O on a FUSE file system, while keeping the other CPUs for other purposes.
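A toy model may help picture the per-CPU design. If I read the 6.14 code correctly, a request is placed on the queue belonging to the CPU the requesting task is running on. The class name is made up, and the io_cpus option is purely hypothetical: it only illustrates the "dedicated I/O CPUs" idea mentioned above and does not correspond to any existing kernel knob.

```python
from collections import defaultdict


class ToyPerCpuRings:
    """Toy model: one request queue per CPU, picked by the submitting CPU."""

    def __init__(self, nr_cpus, io_cpus=None):
        self.nr_cpus = nr_cpus
        # io_cpus is a HYPOTHETICAL knob for the "dedicated I/O CPUs" idea;
        # io_cpus=None corresponds to the 6.14 design (a queue on every CPU).
        self.io_cpus = sorted(io_cpus) if io_cpus else list(range(nr_cpus))
        self.queues = defaultdict(list)   # queue id -> pending requests

    def queue_for(self, cpu):
        if not 0 <= cpu < self.nr_cpus:
            raise ValueError(f"no such CPU: {cpu}")
        if cpu in self.io_cpus:
            return cpu                    # local queue: no cross-CPU hand-off
        return self.io_cpus[cpu % len(self.io_cpus)]

    def submit(self, cpu, request):
        qid = self.queue_for(cpu)
        self.queues[qid].append(request)
        return qid


default = ToyPerCpuRings(nr_cpus=8)
dedicated = ToyPerCpuRings(nr_cpus=8, io_cpus=[6, 7])
print(default.submit(3, "READ"))     # 3: the queue of the submitting CPU
print(dedicated.submit(3, "READ"))   # 7: forwarded to a dedicated I/O CPU
print(dedicated.submit(6, "WRITE"))  # 6
```

Keeping requests on the local CPU avoids contention between cores, while a dedicated-CPU layout would trade that locality for isolating FUSE I/O from other work.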

Using FUSE over io_uring

One awesome thing about the way this feature was implemented is that there is no need to add any specific support to the user-space server implementations: as long as the FUSE server uses libfuse, all the details are totally transparent to the server.

In order to use this new feature one simply needs to enable it through a fuse kernel module parameter, for example by doing:

echo 1 > /sys/module/fuse/parameters/enable_uring

And then, when a new FUSE file system is mounted, io_uring will be used. Note that the above command needs to be executed before the file system is mounted; otherwise it will keep using the traditional /dev/fuse device.

Unfortunately, as of today, libfuse support for this feature hasn't been released yet. Thus, it is necessary to compile a version of this library that is still under review. It can be obtained from the maintainer's git tree, in the uring branch.

After compiling this branch, it's easy to test io_uring using one of the passthrough file system examples distributed with the library. For example, one could use the following set of commands to mount a passthrough file system that uses io_uring:

echo 1 > /sys/module/fuse/parameters/enable_uring
cd <libfuse-build-dir>/examples
./passthrough_hp --uring --uring-q-depth=128 <src-dir> <mnt-dir>
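Since the module parameter only affects mounts made after it is set, the steps above can be wrapped in a small helper that checks the preconditions before mounting. This is a sketch: all function names are hypothetical, the version check assumes a release string starting with "major.minor", and passthrough_hp_argv simply reuses the --uring and --uring-q-depth flags shown above from the libfuse uring branch. I'm assuming the bool parameter reads back as Y or N, and that it is missing when the kernel was built without FUSE io_uring support.

```python
import platform
import re
import subprocess
from pathlib import Path

# Module parameter path quoted in the text above.
ENABLE_URING = Path("/sys/module/fuse/parameters/enable_uring")


def kernel_supports_fuse_uring(release=None):
    """True if the running (or given) kernel release is 6.14 or newer."""
    release = release or platform.release()
    m = re.match(r"(\d+)\.(\d+)", release)
    return bool(m) and (int(m.group(1)), int(m.group(2))) >= (6, 14)


def uring_enabled(param=ENABLE_URING):
    """Read the bool module parameter (expected to read back as Y or N)."""
    try:
        return Path(param).read_text().strip() in ("Y", "1")
    except FileNotFoundError:
        # Assumption: fuse not loaded, or kernel built without io_uring support.
        return False


def passthrough_hp_argv(build_dir, src_dir, mnt_dir, q_depth=128):
    """argv for the libfuse example, using the flags from the text above."""
    binary = Path(build_dir) / "examples" / "passthrough_hp"
    return [str(binary), "--uring", f"--uring-q-depth={q_depth}",
            str(src_dir), str(mnt_dir)]


def mount_with_uring(build_dir, src_dir, mnt_dir):
    # The parameter is only checked at mount time, so verify it first.
    if not kernel_supports_fuse_uring():
        raise RuntimeError("kernel older than 6.14: no FUSE over io_uring")
    if not uring_enabled():
        raise RuntimeError(f"run 'echo 1 > {ENABLE_URING}' as root first")
    subprocess.run(passthrough_hp_argv(build_dir, src_dir, mnt_dir), check=True)
```

Setting the parameter itself still has to be done as root, for example with the echo command shown above; the helper deliberately only checks it.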

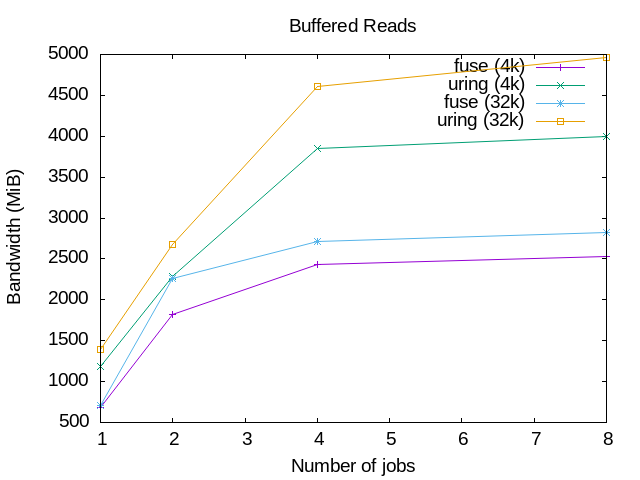

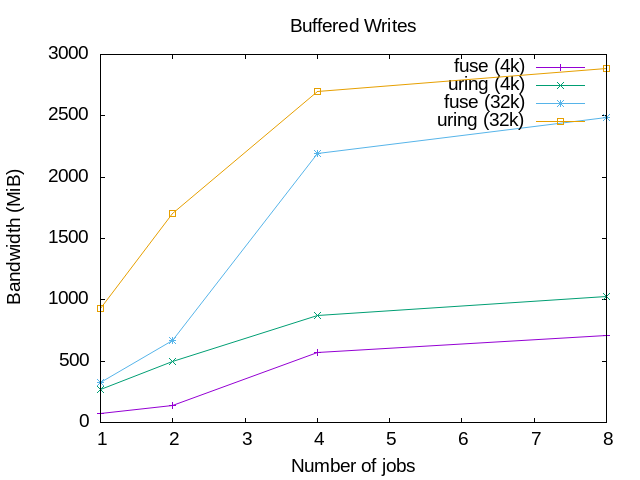

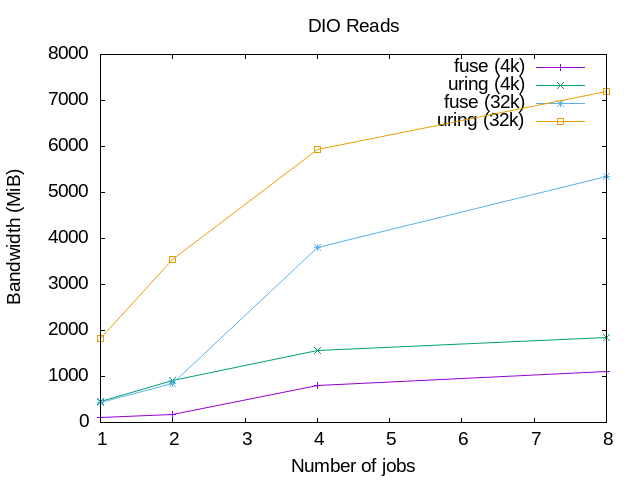

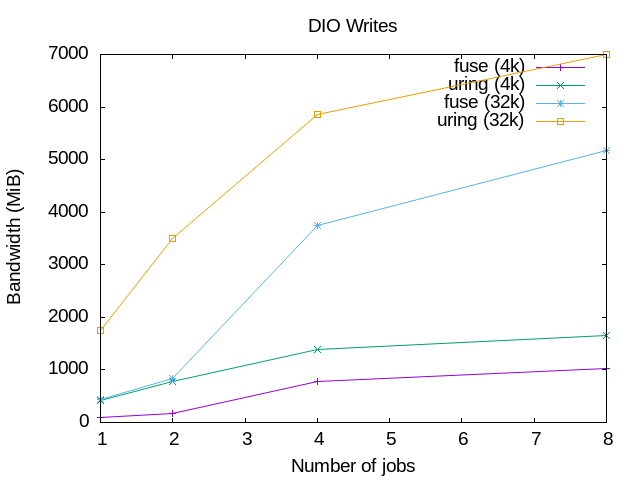

The graphics show the results of running some very basic read() and write() tests, using a simple setup with the passthrough_hp example file system. The workload was generated with fio, the standard I/O workload generator.

The graphics on the left are for read() operations, and the ones on the right for write() operations; the top graphics are for buffered I/O and the bottom ones for direct I/O.

All of them show the I/O bandwidth on the Y axis and the number of jobs (processes doing I/O) on the X axis. The test system had 8 CPUs, and the tests used 1, 2, 4 and 8 jobs. For each operation, several block sizes were used, but these graphics only show the 4k and 32k block sizes.
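For anyone wanting to run a similar matrix of tests, the sketch below generates one fio command per combination of operation, I/O mode, block size and number of jobs. The dimensions come from the description above, but the concrete options (sequential --rw, --size, --runtime, --directory pointing at the FUSE mount) and the fio_commands helper are my own assumptions, not the parameters used to produce these graphics.

```python
import itertools

# Dimensions from the description above; the fio options are assumptions.
OPS = ("read", "write")        # sequential reads / writes (assumed)
DIRECT = (0, 1)                # 0 = buffered I/O, 1 = direct I/O
BLOCK_SIZES = ("4k", "32k")    # the two sizes shown in the graphics
NUM_JOBS = (1, 2, 4, 8)        # the test machine had 8 CPUs


def fio_commands(mnt_dir, size="1G", runtime=30):
    """Yield one fio argv per (operation, mode, block size, jobs) combination."""
    for op, direct, bs, jobs in itertools.product(OPS, DIRECT, BLOCK_SIZES, NUM_JOBS):
        yield [
            "fio",
            f"--name={op}-d{direct}-{bs}-j{jobs}",
            f"--directory={mnt_dir}",   # files are created inside the FUSE mount
            f"--rw={op}",
            f"--bs={bs}",
            f"--direct={direct}",
            f"--numjobs={jobs}",
            f"--size={size}",
            f"--runtime={runtime}",
            "--time_based",
            "--group_reporting",        # one aggregated result per run
        ]


cmds = list(fio_commands("/mnt/fuse"))
print(len(cmds))   # 2 ops x 2 modes x 2 block sizes x 4 job counts = 32
```

Each run would then be executed twice, once with enable_uring set to 0 and once with it set to 1, remounting the file system in between.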


The graphics clearly show that io_uring performs better than the traditional /dev/fuse device. For reads, the 4k block size io_uring tests even outperform the 32k tests using the traditional FUSE device. That doesn't happen for writes, but io_uring is still better.

Conclusion

To summarise, it is already possible today to improve the performance of FUSE file systems simply by explicitly enabling io_uring communication between the kernel and the FUSE server. libfuse still needs to be compiled manually, but this should change very soon, once the library is released with support for this new feature. And this proves once again that user-space file systems are not necessarily "toy" file systems developed by "misguided" people.

Footnotes:

For example, /dev/fuse still needs to be used for the initial FUSE setup, for handling kernel INTERRUPT requests and for NOTIFY_* requests.